The Robot Olympics Are Here: PI’s π0.6 Robot Takes on Doors, Keys, and a Greasy Pan

Monday, December 29, 2025, 16:49, by eWeek

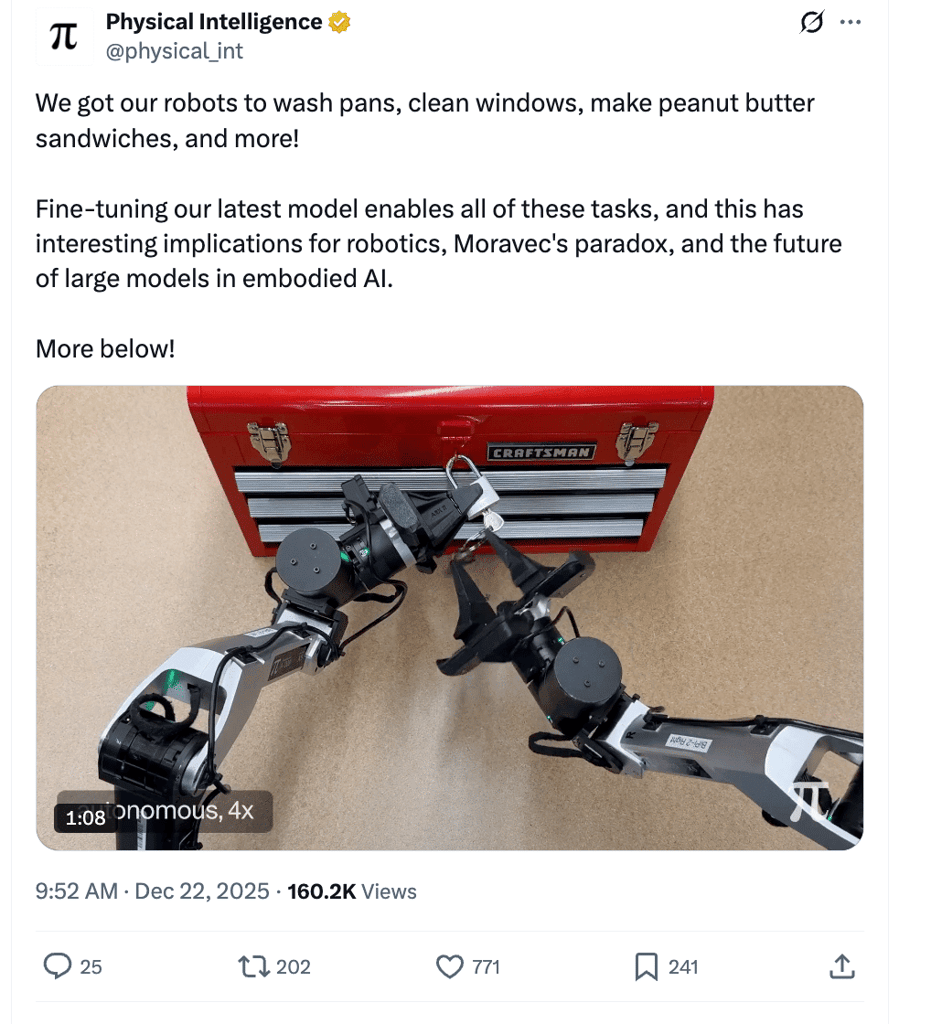

Robot does something flashy → everyone says “cool, now wash the dishes.” Robot tries to wash the dishes → everyone says “not like that.”

Physical Intelligence (PI) decided to stop arguing in circles and turn the whole thing into a test. Its new Robot Olympics is basically the Olympics of household chores: same tasks, same constraints, lots of ways to fail, and a painfully honest scorecard for how close we actually are to useful robots.

The whole “Olympics” framing was first pitched in Benjie Holson’s Humanoid Olympic Games (he literally subtitled the post “gauntlet thrown”), and PI said that part out loud when it launched. Gauntlet accepted!

Here’s what PI tested with its π0.6 “generalist” model (a vision-language-action policy; think “LLM for robots,” where vision + instructions turn into motor actions):

- Door entry: navigate a self-closing lever-handle door without getting caught by the door itself.
- Textiles: turn a sock right-side-out (and admit the gripper is too wide for shirt sleeves).
- Tool use: put a tiny key into a lock and turn it, aka “precision, torque, and no second chances.”
- Cleaning: wash a frying pan with soap and water like a real person who doesn’t want to live in filth.
- Deformables: open a thin plastic dog poop bag (which conveniently blinds wrist cameras at the worst possible time).

PI says all videos are autonomous. This means the robot decomposes the task, makes contact-rich moves, and recovers when things go sideways, without a human nudging the sticks between cuts.

Image: X

Why this matters

Because PI is trying to merge two worlds that usually don’t talk to each other:

- Benchmarks that look like real life (doors, doggy bags, laundry) instead of clean lab puzzles.
- Foundation-model scaling (train big once, then fine-tune for new tasks) instead of bespoke policies for every new object.

That connects directly to PI’s latest research on human-to-robot transfer.
The claim is that once you pretrain VLAs (vision-language-action models) on enough diverse robot experience, they start to “line up” human egocentric video with robot behavior in representation space. Then you can teach robots from cheap human footage without a ton of explicit alignment tricks. In the paper, PI reports roughly 2× improvements on a set of “human-only” generalization scenarios when adding human video during fine-tuning. That’s an early hint that the next data firehose for robots might not be more robot hours… but more humans living their lives on camera.

Of course, there’s a big caveat

“Impressive run” is not the same thing as “reliable product.” A few reality checks to keep your expectations from doing parkour:

- These are still brittle, contact-rich tasks where success can swing on lighting, object placement, or a slightly-too-wet sponge.
- Some failures are just hardware: a too-wide gripper loses the shirt-sleeve event no matter how smart the policy is.
- Benchmarks are the beginning, not the end: what matters is repeatability across many trials, in many kitchens.

What to watch next: whether this “foundation model + fine-tune + real-life eval” loop starts to compound the way it did for language models. If it does, the practical timeline gets less sci-fi and more boringly inevitable.

If you want the full technical thread in a clean format, PI’s write-up is mirrored as an arXiv HTML paper page.

Editor’s note: This content originally ran in the newsletter of our sister publication, The Neuron. To read more from The Neuron, sign up for its newsletter here.

The post The Robot Olympics Are Here: PI’s π0.6 Robot Takes on Doors, Keys, and a Greasy Pan appeared first on eWEEK.

https://www.eweek.com/news/robot-olympics-2025-neuron/
